Full-text search at Contentful just got faster: Why it’s important and how we did it

Full-text search in Contentful just got faster — we improved the median response time by 35%. This is the story of how we did it and why it's important.

Searching through mountains of content

We are surrounded by mountains of content. Every day, new blog posts, news articles, recipes, product reviews, and any other type of content you can think of are created. But being surrounded by mountains of content means you need a way to search through it and find just what you’re looking for.

Working within Contentful is no different. The content that you and your team creates increases day after day — and soon you might not be able to remember what content you’ve made. What was the title of that article about Swiss cows? "Why Swiss cows climb mountains" or "The Swiss cows love mountains?” You can only remember that it talked about "Swiss" and "cows,'' so that's what you type in the search box in Contentful's web app.

If the results to this search don't include the article you’re looking for, you will most likely try a few more times with different variations. Each of these attempts takes time as you wait for Contentful to return the results. The longer the wait, the more you will feel that your workflow is being interrupted. At Contentful, we pride ourselves on helping teams and individuals across the world be more productive. Quickly finding what you are looking for is crucial for productivity. So we gave some vitamins to our full-text search implementation to make it work better.

These performance improvements, along with our recently released tagging feature, enable editorial teams to stay productive as their content grows. Now you can quickly locate that article you need either by browsing your tag collection, by using a full-text search or by combining them.

The tech behind the scenes

Before we go into the details of what we did to improve performance, we have to first take a small detour and give you a lightweight introduction as to how we store data and how full-text search is implemented.

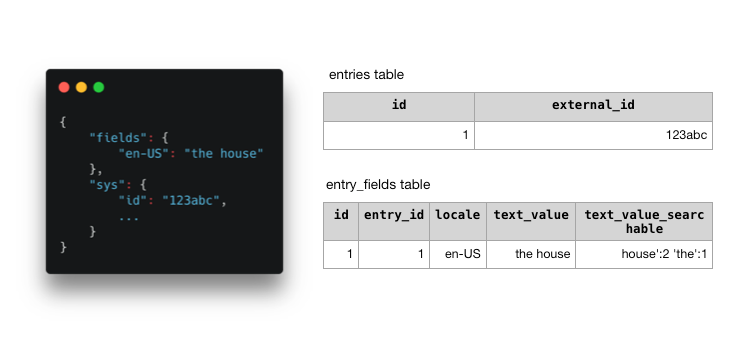

We use AWS RDS for Postgres as our database technology. The content entities objects (entries, assets and content types) are disassembled and stored in different tables in the database schema. As an example, we include a very simplified version of the tables that store entries (we have omitted multiple columns on both tables to have a simpler example).

When a customer creates or updates an entry, we split the entry JSON object and create or update the required rows in the entries and entry_fields tables. When the customer fetches an entry, we take the opposite direction and "re-assemble" the entry object from the rows in these two tables. For the purpose of this article we show the text_value_searchable and text_value columns of the entry_fields table. The text_value column contains the raw value of any Text, Symbol or RichText field and text_value_searchable stores the tsvector representation of the text_value. Postgres uses tsvectorss to perform full-text searches and by pre-computing it we save some CPU cycles and time on each full-text search.

Now that we have an idea of how data is stored in the database, we can move on and explain how full-text search was implemented before the changes we made. As we introduced in the post If you can’t find it, did it really happen? Contentful phrase-based, full-text search, for a full-text search the APIs will return all the entries where the search terms can be found on any of their fields on any of their locales. These search requirements are translated into SQL with one subselect for each of the search terms.

The SQL above would return all the rows from the entries table for which both full-text search subselects have a match (that is, entries where the words “Swiss” and “cows” can be found). With the returned rows we would assemble the entries and return them to the customer who made the search.

This finishes our quick intro of how your content is stored and how we did full-text search at Contentful. Let's talk about improvements now.

Input normalization

Those of you familiar with Postgresql might have noticed that in the previous SQL we didn't use plainto_tsquery to convert the search terms into a tsquery but instead use a custom function (cf_normalize_fts_input_string). This is because we have to apply a more elaborated process than just converting the search terms directly to tsquerys.

In Contentful, full-text search terms are left anchored. This means that when you, for example, search for “house,” we will convert it into the tsquery house:* (the :* is a wildcard suffix), which will match house as well as words like houses, household, housework, etc. For some inputs, plainto_tsquery would return tsquery values which we don't want to suffix with :* as they would result in a lot of unuseful matches. For example:

- For an input like “lo-master,” plainto_tsquery would return “lo” and “master.”

- For an input like “I'm,” plainto_tsquery would return “I” and “m,” or for Q&A “Q” and “A.”

If we added the wildcard suffix :* to tsqueryes like “lo”, “I” or “A,” we would match any word which begins with those prefixes and that's something we don't want because:

- When a user searches for “lo-master” they won't be interested in matches for local, low, long, etc.

- a:* would incur in a performance penalty. For such a term, there will be a lot of matches (think of all the words that can start with the letter A), which the database will have to process and filter before returning a result. This leads to longer response times.

To work around these issues, we had to do something else besides using plainto_tsquery. We call this input string normalization. The first version of it was an inline subselect where we used tsdebug to determine which lexemes made it to the returned tsquery. For example, for “lo-master” the returned tsquery would be “lo-master:*” and for “Q&A,” “q & a” (notice that this tsquery has no suffix and will only match a single q and a single a).

This solution worked well. It allowed us to reduce the number of false matches and gain some performance. It had, however, one small drawback that we only discovered some time later.

One of our customers searched for “Trump and Biden prepare for a second debate clash while the moderator prepares the mute button,” which resulted in 15 subselects (one per term in the input string), each of them with an inline normalization subselect. This query took more than 10 seconds to run and when we analyzed the query execution plan we noticed that the planner has picked the subselect with the term “and” to start the query execution. This subselect returned ~840K rows which would then be filtered up in the query plan.

It seemed strange that the database had chosen to start with a word with such high selectivity (i.e. that matches a high number of rows) instead of picking a different one. While this could have been a problem with the statistics themselves, we started playing with the inline subselect that does the string normalization. After some experimentation it was clear that the inline subselect was harming the ability of the database to pick the best plan. When Postgres' query planner sees a SQL like the one above it doesn't know what the output of those (SELECT /*normalize input string...*/ …) statements will be so it can't fully leverage its statistics to choose the most optimal plan.

Our solution to this problem was to move the input string normalization logic into a SQL function (cf_normalize_fts_input_string) and flag it as immutable parallel safe:

- inmutable to allow the planner to pre-evaluate the function and replace it with its result inline

- parallel safe to allow the planner to use parallelization when possible. Following is a bare-bones snippet of such a function.

After we rolled out this function, the search for “Trump and Biden prepare for a second debate clash while the moderator prepares the mute button” went from 10s to ~20ms because the planner started the query execution searching for rows where “biden:*” could be found, which resulted in only ~1.5K hits, a reduction of ~99% in the amount of rows to filter through in other plan nodes.

However, there's one drawback to this approach. Query planning gets slower because these functions have to be pre-evaluated. The more functions, the slower the planning gets. But all in all, this is a tradeoff that we are happy to pay because the overall results have been very positive.

Table normalization

If you recall, at the beginning of this post we showed a bare-bones version of our schema. In the entry_fields table the text_value column holds the value for any Text, Symbol or RichText field and the text_value_searchable column the tsvector calculated from the value in text_value.

We also showed a simplified version of the SQL we used to do full-text searches, with one subselect per full-text search term. Having one subselect per term didn't look very efficient to us but it was the only way to satisfy our search requirements: return all the entries where all the search terms can be found on any of their fields on any locale. After some experimentation, we decided that our best option was to combine all the text values of an entry for one locale in just one row, so we could avoid the one-subselect-per-search term and instead have just one where we searched for all the terms at once. In practical terms, this meant creating a new table to store the combined text values and adjusting the querying logic. The adjusted schema would look like this:

And the query would change from this:

To the one below. Notice that now there's only one subquery (instead of one per search term) which uses the entries_full_text_search table.

We noticed that new full-text searches using the new table were in some occasions slower than the original querying approach. After some investigation, we learned that doing a full-text search where multiple terms are combined (like “swiss:* & cows:*”) will be as slow as the slowest index scan for any of the terms in Postgres. In other words, when presented with a constraint like <column name> @@ '<term one> & <term two> & ... & <term n>', Postgres will do one index scan for each of the terms and then and, the result of each to return only the pages where all the terms can be found. The slowest scan will dominate the performance of the whole search. This was unfortunate for us, as it seemed that all our efforts would have been for nothing.

At that point we decided to try using the new table with the same querying strategy as before, that is, one subselect per search term. With that change, we returned to the planner a chance to order the subselects in the most optimal way. We started seeing improvements in the response times.

In this case, the improvement in response times wasn't only a function of the query plan ordering, but also of the nature of the new entries_full_text_search table. The table has one row per locale of the source entry, which contains the concatenation of all the text vectors for that locale in the entry. This means that the table is more compact than the main entry_fields table (where text values for the same entry and locale are spread in multiple rows, mixed with rows for non text values). This impacts the performance of the GIN index that we have on the table to speed up full-text searches:

- A smaller table means that Bitmap Scans on the index return less pages to be checked.

- Less pages to be checked means that Bitmap Heap Scans will be also faster as the requirements for IO will also be smaller.

As Contentful continues to grow in the number of customers, and the requirements of our customers grow, managing content becomes more and more important. Today’s solutions won’t always scale, and we’ll go back to the drawing board to design the next iteration of our systems. If this sounds interesting to you and you would like to be part of our team, go check out our careers page. We are hiring!