ARrrrg! How pirates boarded the Google AR Android Demo Application

When DevFest Hamburg asked me to give a talk last November, I knew immediately that I wanted to do something that would inspire people to work with augmented reality (AR). From there, it went something like this: Hamburg is known for its harbors, harbors have ships, ships are home to pirates (which I love), and pirates say arrrg… which has AR in it... ARrrg. Get it?

Plus, the thought was too tempting to resist.

This post will take you on a journey through the land of AR, Java, and pirates. I’ll introduce Google’s ARCore and walk through how I altered it to fit my personal use case: taking an unlimited number of action figures with me while I travel, without actually packing anything.

But before we get going, put on your headphones and play our pirate-approved soundtrack.

Introduction to ARCore

(Or arrrrrr core, scurvy dog! ⚓)

ARCore is Google’s augmented reality library. It runs on Android phones (but only top-notch ones like the Google Pixel, Pixel 2, and Samsung Galaxy S8) and lets you make use of horizontal planes inside AR apps. Using the phone’s sensors and camera, the library finds so-called feature points (visually significant points in the image, like edges or corners) and tracks them over time.

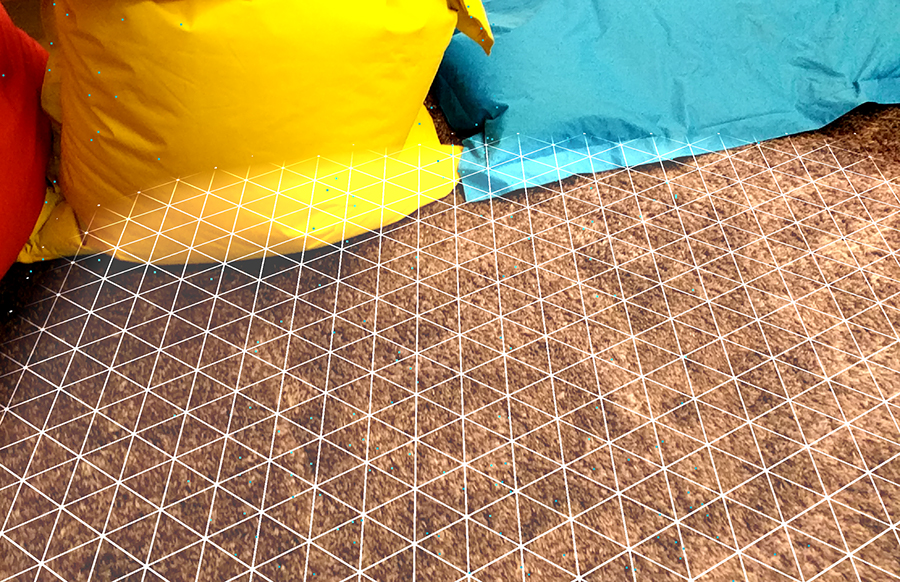

Once enough feature points have been found, ARCore tries to fit a horizontal plane through those points in three-dimensional space. As soon as it considers the plane good enough, it exposes the plane to the app, which can then place objects on it or analyze it further. The following images show an example of this horizontal plane, taken from Google AR’s demo app.

See the green dots in the upper left-hand corner on the beanbags? Those are feature points tracked through movement and lighting. The white mesh is a plane fitted from the feature points.

Objects, or in this case Androids, are placed on the aforementioned plane and are stable throughout movement.

Additionally, ARCore can estimate the global lighting conditions in the scene as seen by the camera. A programmer can use this estimate to dim the objects displayed in AR so they more closely match reality.
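To make the dimming idea concrete, here is a minimal sketch in plain Java. The class name LightDimming and its method are hypothetical and not part of ARCore or the demo; the sketch simply assumes the light estimate arrives as a single intensity value between 0 and 1 and multiplies each color channel by it.

```java
/** Hypothetical helper for illustration only; not part of ARCore or the demo app. */
final class LightDimming {
    private LightDimming() {}

    /**
     * Scales each color channel by the estimated light intensity.
     *
     * @param rgb color channels, each assumed to be in the range [0, 1]
     * @param lightIntensity assumed light estimate; clamped to [0, 1]
     * @return a new array holding the dimmed color
     */
    static float[] dim(float[] rgb, float lightIntensity) {
        // Clamp so an out-of-range estimate can never brighten or invert the color.
        float clamped = Math.max(0f, Math.min(1f, lightIntensity));
        float[] dimmed = new float[rgb.length];
        for (int i = 0; i < rgb.length; i++) {
            dimmed[i] = rgb[i] * clamped;
        }
        return dimmed;
    }
}
```

In a real renderer this multiplication would more likely happen in the fragment shader, with the intensity passed in as a uniform.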

The code behind the ARCore Demo App

(Yo ho ho, a pirate on the lookout 🔎)

Now that we have a general understanding of what ARCore does, let’s take a look at the underlying code and folder structure.

As you can see, the folder structure is logically separated. There is HelloArActivity.java, which, from the name alone, we can assume is the entry point. Additionally, we find a rendering package containing different renderers that, judging from their names again, seem to render the nice things we see on our phone (like the feature points, the point cloud renderer for objects, and so on).

We can also tell that a dependency was checked into this repo: obj-0.2.1.jar, found in its lib folder. This is odd, especially since the same version is available through Gradle, the usual way of managing dependencies on Android.

If you declare it as a dependency in your app’s build.gradle file instead, you won’t have to manage another library file in your project.
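A dependency declaration along these lines should do it. This is a sketch that assumes the library is published under the Maven coordinates de.javagl:obj and that your repositories block includes Maven Central or JCenter; older versions of the Android Gradle plugin use compile instead of implementation.

```groovy
dependencies {
    // Replaces the obj-0.2.1.jar that is checked into the lib folder.
    implementation 'de.javagl:obj:0.2.1'
}
```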

This library is awesome because it allows you to load any model stored in the OBJ format. It can even format the data so that the OpenGL rendering library can use it without any pain. So including this library removes a lot of complexity and is a great addition to any project rendering AR content on Android.

There is another thing I'd like to point out in the code: the HelloArActivity. It's nice because it's a rather small Android Activity with only 300 lines of code. Sadly, almost 100 of those lines belong to a single method: onDraw. This method is called whenever the Android system tells the app to redraw all of the AR objects on the screen, which is roughly 60 times per second.

Besides being huge, this method also does a lot of things at once. It renders the virtual objects, updates ARCore's predictions about reality, checks whether a tap occurred on the fitted plane, and if so, adds a new object to be rendered. On top of that, there is a big try/catch block around the entire method, ignoring any exceptions that might occur.

This feels like bad practice. Plus, only being able to add Androids to our reality is pretty limiting. I'm not saying that there's anything wrong with Androids, but I’d love to be able to take more figurines with me on my journey. So I took a shot at adding to this existing demo.

The pirates are aboard

(Conquer the land lovers 🏖️)

By building on top of the existing ARCore demo, I wanted to extend what it can do and keep my virtual figurines with me while traveling. To begin with, I updated the icon to something way more my style:

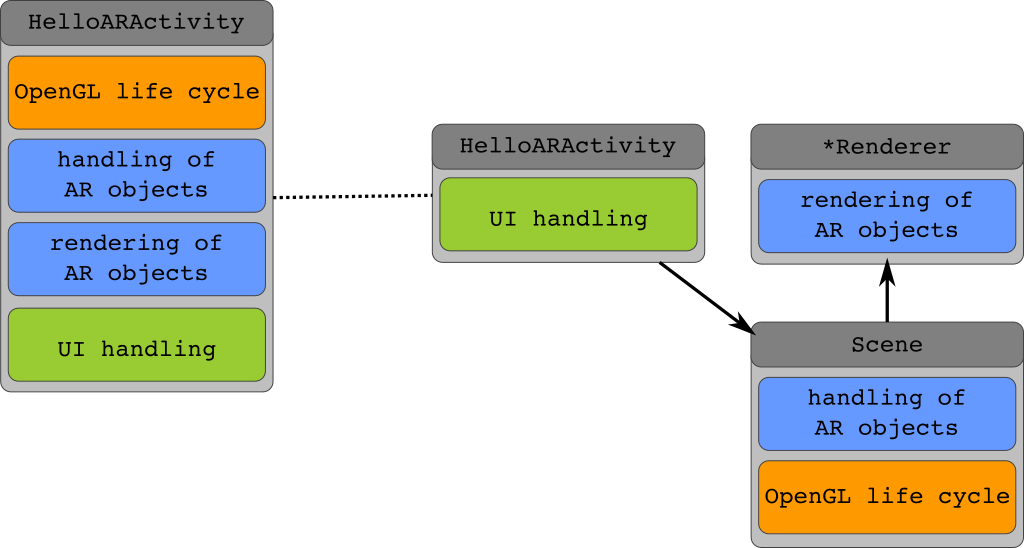

From there, I split up the HelloArActivity. The following graph shows this logic, but I mostly just created a simple scene graph. The renderers are now arranged in a class called Scene, which takes over the OpenGL lifecycle. This limits the main activity to redirecting the right calls to the scene, rendering the user interface (UI), and informing the scene about taps on the screen. The scene, in turn, redirects rendering calls to the renderers and also tells ARCore to update its predictions.
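The delegation described above could be sketched roughly as follows. This is not the code from the Pirate AR repo: the SceneRenderer and TrackingSession interfaces are hypothetical stand-ins (for the OpenGL renderers and for ARCore, respectively), and only the name Scene comes from the text.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical renderer contract; the real renderers issue OpenGL calls. */
interface SceneRenderer {
    void draw(float[] viewMatrix, float[] projectionMatrix);
}

/** Hypothetical stand-in for the part of ARCore that refreshes its predictions. */
interface TrackingSession {
    void update();
}

/** Owns the renderers and forwards the per-frame calls coming from the activity. */
final class Scene {
    private final TrackingSession session;
    private final List<SceneRenderer> renderers = new ArrayList<>();

    Scene(TrackingSession session) {
        this.session = session;
    }

    /** Registers another renderer, for example after a tap on the fitted plane. */
    void addRenderer(SceneRenderer renderer) {
        renderers.add(renderer);
    }

    /** Called once per frame: update tracking first, then draw every renderer. */
    void drawFrame(float[] viewMatrix, float[] projectionMatrix) {
        session.update();
        for (SceneRenderer renderer : renderers) {
            renderer.draw(viewMatrix, projectionMatrix);
        }
    }
}
```

With this split, the activity only needs to hold a Scene and call drawFrame from its draw callback; adding a new kind of object means adding a renderer, not growing onDraw.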

You can find the commit for this process on my Pirate AR GitHub repo.

Before, the ARCore demo only used the .obj files and textures stored in the Android package (APK), so adding different sources was next to impossible. But now, we’re able to render several objects. With the changes introduced, all assets (textures, models, shaders, etc.) are loaded from our external storage. This means that different subsystems can also contribute new objects. So there’s nothing standing in the way of adding more objects.

Andy the Android, Conner Contentful, and Ara the AR Animal are now happily combined into one AR scene.

Working with XML layouts in AR

(Avast ye, a pirate flag in the wind 🏴)

Another difference between the ARCore demo and my Pirate AR app is that Pirate AR can render arbitrary Android XML layouts in AR. This way you can create text, images, sliders, and so on, and then render them in AR. The process is simple: in augmented reality, we place a second plane on top of the fitted plane. This placed plane consists of only four corner points. The magic of translating an XML layout into a texture happens by creating the layout’s view in Android and rendering this view into a canvas. The result is saved into a bitmap, and this bitmap can be used as a texture on the placed plane.

Here’s a glimpse of what it looks like (alongside some of our other objects):

Generating the texture from the XML layout can be done in four steps:

First, let's get the size of the screen, so we can create a texture that’s the same size. If you want to optimize, consider using a power-of-two texture and reducing the pixel count, since non-power-of-two (NPOT) textures can limit performance.
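As a small illustration of the power-of-two idea, the following plain-Java sketch rounds a dimension down to the nearest power of two. The class TextureSizes and its methods are hypothetical helpers, not part of Android or the Pirate AR app.

```java
/** Hypothetical helper for picking power-of-two texture dimensions. */
final class TextureSizes {
    private TextureSizes() {}

    /** Returns true if value is a positive power of two. */
    static boolean isPowerOfTwo(int value) {
        return value > 0 && (value & (value - 1)) == 0;
    }

    /** Rounds a positive dimension down to the nearest power of two. */
    static int floorPowerOfTwo(int value) {
        if (value < 1) {
            throw new IllegalArgumentException("dimension must be positive: " + value);
        }
        // highestOneBit keeps only the most significant set bit.
        return Integer.highestOneBit(value);
    }
}
```

For example, a 1080 x 1920 screen would map to a 1024 x 1024 texture, which cuts the pixel count roughly in half at the cost of some sharpness and a changed aspect ratio.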

Now, let’s create a bitmap to store the rendered layout, along with a canvas that draws into that bitmap.

After creating the canvas, we can render the content to it. So next, we’ll create a view of the correct size.

Two calls matter here: view.measure and view.layout. They are part of the view lifecycle and ensure that the right measurements are used. If the layout is not rendered correctly, make sure that you have called both methods in succession (and in the right order).

Lastly, we’ll draw the layout view onto the canvas, filling the bitmap with the contents of the view, so we can use it as a texture.
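Put together, the four steps might look roughly like the sketch below. It uses standard Android APIs, but the class LayoutTextures and the method renderLayout are hypothetical names chosen for this example and are not taken from the Pirate AR repo. It needs the Android SDK to compile.

```java
import android.content.Context;
import android.graphics.Bitmap;
import android.graphics.Canvas;
import android.util.DisplayMetrics;
import android.view.LayoutInflater;
import android.view.View;

/** Hypothetical helper that turns an XML layout into a bitmap usable as a texture. */
final class LayoutTextures {
    private LayoutTextures() {}

    static Bitmap renderLayout(Context context, int layoutResId) {
        // Step 1: use the screen size as the texture size.
        DisplayMetrics metrics = context.getResources().getDisplayMetrics();
        int width = metrics.widthPixels;
        int height = metrics.heightPixels;

        // Step 2: a bitmap to hold the pixels, and a canvas that draws into it.
        Bitmap bitmap = Bitmap.createBitmap(width, height, Bitmap.Config.ARGB_8888);
        Canvas canvas = new Canvas(bitmap);

        // Step 3: inflate the layout, then measure and lay it out at exactly that size.
        // measure must come before layout, otherwise the view has no dimensions yet.
        View view = LayoutInflater.from(context).inflate(layoutResId, null);
        view.measure(
                View.MeasureSpec.makeMeasureSpec(width, View.MeasureSpec.EXACTLY),
                View.MeasureSpec.makeMeasureSpec(height, View.MeasureSpec.EXACTLY));
        view.layout(0, 0, view.getMeasuredWidth(), view.getMeasuredHeight());

        // Step 4: draw the view into the canvas, which fills the bitmap.
        view.draw(canvas);
        return bitmap;
    }
}
```

The returned bitmap can then be uploaded to OpenGL, for instance with GLUtils.texImage2D, and mapped onto the four-corner plane.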

Summary

(Payday, got our loot 💰)

Throughout this post, we’ve discussed the current features of ARCore by running through its initial demo. After dissecting what we wanted to change, we moved on to the Pirate AR app. While it has the skeleton of the original demo from Google, the Pirate AR app has been altered to be more flexible: it can render different objects, and it can render Android layouts in AR.

For the source code, you can find both the original Google AR demo and my customized Pirate AR app on GitHub. If you’re interested in building your own apps, I’d recommend starting with this ARCore guide from Google Developers.

Are you an Android developer? We have an Android SDK!